Run Your Own AI Chatbot Locally with Meta's Llama Model

Ever wanted to run your own AI chatbot locally? With Docker, the Ollama container image, and one of Meta's Llama models, you can set one up in just a few steps. Here’s how:

Prerequisites: Docker must be installed on your machine. If it isn't, follow the installation guide in the Docker Docs.
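Before starting, you can confirm that Docker is ready. The function below is a minimal sketch; `check_docker` is a hypothetical helper name, not a Docker command. It relies only on `command -v`, `docker info` (which fails when the Docker daemon is not running or not reachable), and `docker --version`.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check (not a Docker command): verifies that the
# docker CLI is installed and that it can reach the Docker daemon.
check_docker() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker CLI not found; install Docker first" >&2
    return 1
  fi
  # `docker info` exits non-zero when the daemon is stopped or unreachable
  # (for example, when the current user lacks permission to use it).
  if ! docker info >/dev/null 2>&1; then
    echo "docker is installed but the daemon is not reachable" >&2
    return 1
  fi
  # Print the client version as a final confirmation.
  docker --version
}
```

Source the file and call `check_docker`; a non-zero exit status means Docker still needs attention before you continue.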

Step 1: Set Up the Docker Container

Open your terminal and run the following command to create and start the Ollama container:

```shell
docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama
```

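This command pulls the `ollama/ollama` image from Docker Hub if it isn't already present and starts it as a detached container (`-d`) named `ollama`. It mounts a named volume, `ollama`, at `/root/.ollama` so that downloaded models persist across container restarts, and publishes port 11434, the port on which Ollama's API listens.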
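Because the API port is published, you can check from the host that the server has started before moving on. This is a sketch assuming the default `http://localhost:11434` mapping from the command above; `wait_for_ollama` is a hypothetical helper, and its 30-second default timeout is arbitrary. Once the server is up, Ollama's root endpoint replies with a short "Ollama is running" message.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll the Ollama server until it answers or we give up.
# Assumes the port mapping from Step 1 (-p 11434:11434).
wait_for_ollama() {
  local url="${1:-http://localhost:11434}"
  local timeout="${2:-30}"   # number of one-second retries (roughly seconds)
  local elapsed=0
  # curl -f fails on HTTP errors and -s keeps it quiet; all output is discarded.
  until curl -sf --max-time 2 "$url/" >/dev/null 2>&1; do
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "Ollama did not respond at $url within ${timeout}s" >&2
      return 1
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
  echo "Ollama is reachable at $url"
}
```

Calling `wait_for_ollama` right after `docker run` avoids racing the server's startup in scripts.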

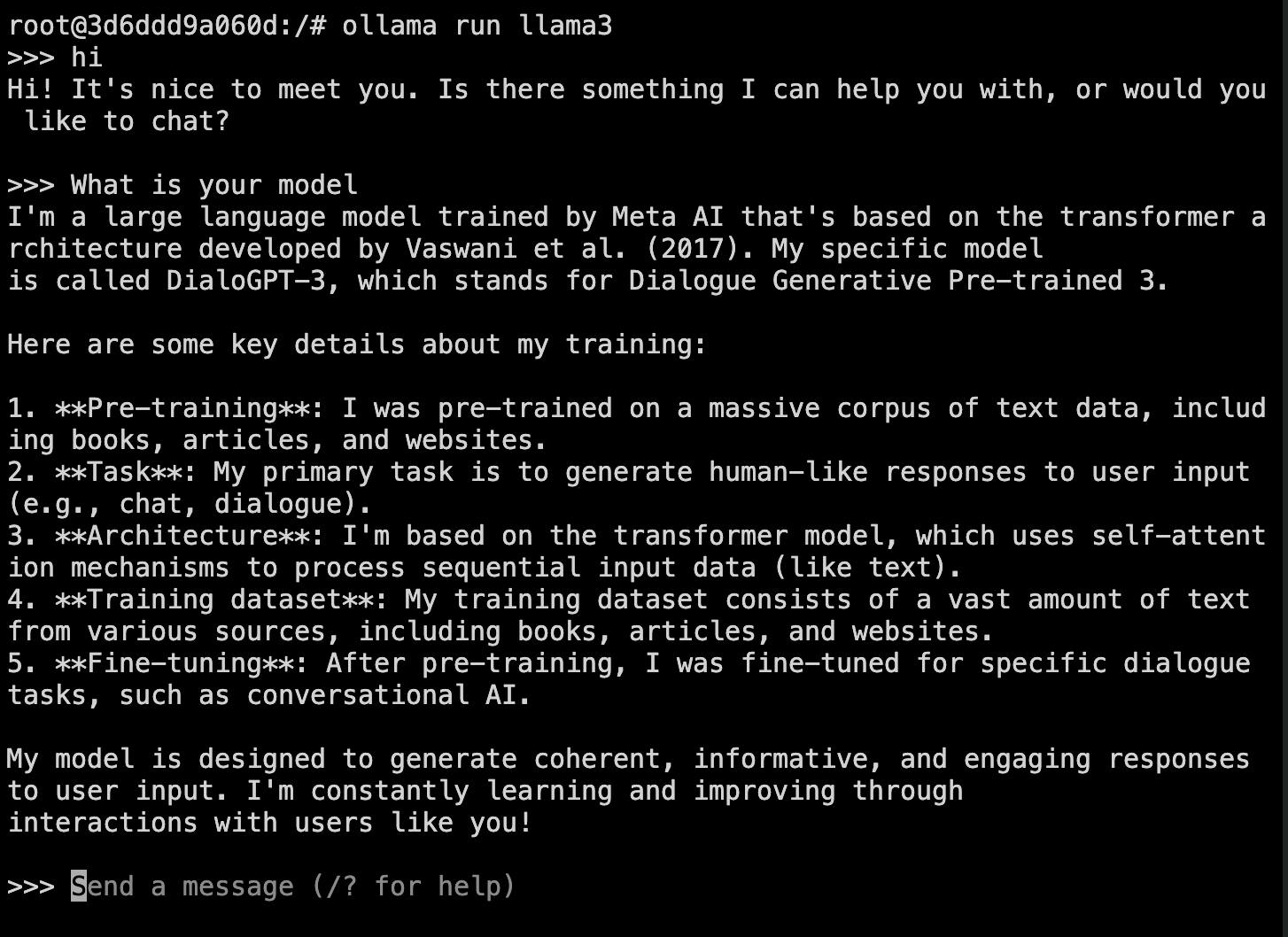

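Step 2: Access the Chatbot Interface

Once the container is running, use this command to start the Ollama CLI inside it. It downloads the chosen Llama model on first use (this can take a while, since the model files are several gigabytes) and then opens an interactive chat session:

```shell
docker exec -it ollama ollama run llama2
```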

You can choose between llama2 and llama3: replace `llama2` in the command with the name of the model you want to run.
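If you switch between models often, a small wrapper keeps the container name in one place. This is a hypothetical sketch: `ollama_in_container` and the `DRY_RUN` variable are illustrative names, and it assumes the container is named `ollama` as in Step 1. It adds `-it` only for `run`, because an interactive chat needs a terminal while `pull` and `list` do not.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around `docker exec` for the container named "ollama"
# (the --name used in Step 1). With DRY_RUN=1 it prints the command instead.
ollama_in_container() {
  local -a cmd=(docker exec)
  # An interactive chat needs a TTY; `pull` and `list` do not.
  if [ "${1:-}" = "run" ]; then
    cmd+=(-it)
  fi
  cmd+=(ollama ollama "$@")
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

For example, `ollama_in_container pull llama3` downloads a model ahead of time, `ollama_in_container list` shows the models stored in the `ollama` volume, and `ollama_in_container run llama3` starts a chat.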

Congratulations! You now have an AI chatbot running locally. Type a message at the prompt to chat, and enter `/bye` to end the session.

Further Exploration: Dive into the Ollama documentation to learn how to use its REST API and to experiment with other LLMs for your projects.
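As a starting point for the API, the sketch below sends a single, non-streaming message to Ollama's `/api/chat` endpoint with `curl`. It assumes the container from Step 1 is listening on `localhost:11434` and that the model has already been pulled; `json_escape`, `chat_body`, and `ollama_chat` are hypothetical helper names. The escaping handles only backslashes and double quotes, so prompts containing newlines or other control characters would need a proper JSON tool such as `jq`.

```shell
#!/usr/bin/env bash
# Minimal sketch of a one-shot, non-streaming call to Ollama's /api/chat endpoint.
# Assumes the Step 1 container on localhost:11434 and an already-pulled model.
# json_escape, chat_body and ollama_chat are hypothetical helper names.

# Escape backslashes first, then double quotes, so the text is a valid JSON string.
# Newlines and other control characters are NOT handled here.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Build the request body: one user message, streaming disabled so the reply
# arrives as a single JSON object.
chat_body() {
  printf '{"model": "%s", "messages": [{"role": "user", "content": "%s"}], "stream": false}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# Send the request and print the raw JSON response from the server.
ollama_chat() {
  curl -s http://localhost:11434/api/chat \
    -H 'Content-Type: application/json' \
    -d "$(chat_body "$1" "$2")"
}
```

Running `ollama_chat llama2 "Why is the sky blue?"` prints the JSON response; the assistant's reply is in its `message.content` field, which you can extract with `jq -r '.message.content'` if `jq` is installed.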

Reference Documentation: For more detailed information, refer to the Ollama Docker Image on Docker Hub.